My 3.3V supply, provided by the MCP1700-33 on the HeaterMeter, measures 3.275V at the probe contact points (a little low) with a Fluke multimeter. The HeaterMeter reports my bandgap reference as BG349. I have no noise problems, as this test was run from a 12V battery source.

Where are the most probable sources of error that I might be able to track down at this point? Is the fact that the 3.3V supply is a little low a possible source? Is there a reason the MCP1702-3302E was not used in the design, as it has a better voltage regulation accuracy of 0.4%?

The MCP1700 wasn't selected specifically for this application; it was the part I had on hand because of its lower dropout voltage. It was also a part you could find at a variety of places, which was important because the design predates the ubiquity of electronic components we're blessed with today. I'm sure there are much better linear regulators in the same price ballpark, as these parts are optimized for low quiescent current draw, which is something we don't really care about in this always-powered application. I do think the output voltage is good enough for our application, but if I were going to design the whole thing from scratch again I would definitely look at other options.

The calculated thermocouple output depends on the 3.3V regulator, and the code expects the AVCC voltage to be exactly 3300mV, so any variation from that is going to cause a little error. The bandgap voltage is measured with AVCC as the reference, and then the bandgap is used as the reference when measuring the thermocouple amplifier output. Your BG349 means the bandgap is calculated to be 349/1023 * 3300 = 1125mV. At 5mV/C, a temperature of 25C is 125mV and we'd get the expected ADC reading of 113 (113.77). Because the 3.3V AVCC is actually 3275mV, the actual bandgap voltage is 349/1023 * 3275 = 1117mV and that same 25C reading is now 114 (114.59), leading to a temperature error of around 0.2C. The error can be corrected by changing the mV/C to 3300/3275 * 5.000 = 5.038mV/C.
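For anyone who wants to plug in their own numbers, here is a rough Python sketch of the arithmetic above. It is not the HeaterMeter firmware; the function names are made up for illustration. It assumes the bandgap estimate divides by 1023 as written in the post and that the ADC readings use 1024 steps, which is what reproduces the quoted 113.77 and 114.59. The post rounds the bandgap to whole millivolts before the next step, so the readings here differ slightly in the second decimal.

```python
# Sketch of the AVCC error arithmetic; names are illustrative, not firmware identifiers.
NOMINAL_AVCC_MV = 3300.0   # what the code assumes AVCC to be
MV_PER_C = 5.0             # thermocouple amplifier scale used in the post


def bandgap_mv(bg_counts, avcc_mv):
    """Bandgap voltage implied by the BG count for a given AVCC (post divides by 1023)."""
    return bg_counts / 1023 * avcc_mv


def adc_reading(signal_mv, reference_mv):
    """ADC count for a signal measured against a reference (assumes 1024 steps)."""
    return signal_mv / reference_mv * 1024


def corrected_mv_per_c(measured_avcc_mv, mv_per_c=MV_PER_C):
    """mV/C setting that compensates for AVCC not being exactly 3300mV."""
    return NOMINAL_AVCC_MV / measured_avcc_mv * mv_per_c


bg_counts = 349            # from "BG349"
measured_avcc_mv = 3275.0  # multimeter reading from the question

assumed_bg = bandgap_mv(bg_counts, NOMINAL_AVCC_MV)   # what the code believes
actual_bg = bandgap_mv(bg_counts, measured_avcc_mv)   # what is really there

signal_mv = 25.0 * MV_PER_C                           # 25C -> 125mV
reading = adc_reading(signal_mv, actual_bg)           # count the ADC really produces

# The code converts that count back to mV using the assumed bandgap.
reported_c = reading / 1024 * assumed_bg / MV_PER_C
temp_error_c = reported_c - 25.0

print(f"assumed bandgap: {assumed_bg:.1f} mV, actual: {actual_bg:.1f} mV")
print(f"ADC reading at 25C: {reading:.2f}")
print(f"temperature error: {temp_error_c:.2f} C")
print(f"corrected scale: {corrected_mv_per_c(measured_avcc_mv):.3f} mV/C")
```

Note that the error works out to 25C * (3300/3275 - 1), so it grows with temperature: about 0.2C at 25C but closer to 1C at typical smoker temperatures, which is still small next to the other uncertainties.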

All that said, the precision of the temperature measurement isn't too critical given all the other uncertainties in the system. Being consistently off by 0.2C isn't a big deal when the temperature inside the smoker can vary by 10-20C depending on where you measure the temperature, the state of the meat, if you use a water pan, etc.

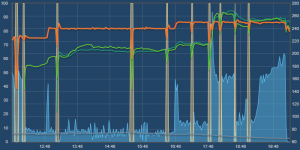

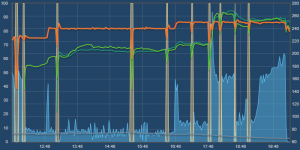

The more data I have to look at, the more upset I get about why we can't get better temperature control with all this technology. I used to advocate for the dome being the best place to measure the temperature, but that testing was done without meat involved. I've since found that if I put a single rack of ribs (St. Louis style) on my Big Green Egg, 225F at the dome can be as low as 180F an inch away from the ribs at grate level (a 45F error). When I bumped up to 240F and later 250F, the error was just 35F. As the ribs approached doneness (about 1.5 hours left), the error inverted and the temperature at the grate was now 15F above the dome temperature. At one point, one grate-level temperature was 8F above and another was 7F below the dome temperature, an error of 15F between two things you'd expect to be the same. The whole time, HeaterMeter kept the temperature within 5F of the setpoint, but the meat experienced a range of temperatures spanning 60F! The ribs were delicious, smoked and cooked perfectly, but the question is: what was the actual temperature?